The Path to AI-Ready Regulatory Affairs

How a Small RA Team Should Measure AI Impact, Choose the Right Level of Adoption, and Navigate the Transition

Now that we’ve laid out the five levels of AI adoption, the next natural questions are: how do you measure the impact, and how do you know which level fits your team?

If you’re running a small RA team (three people, a heavy workload, and a long list of markets to open), you don’t have the luxury of endless experimentation. You need to know what works, what moves the needle, and what’s noise.

Here’s a practical framework that cuts straight to it.

1. So… what should you measure? (And what actually matters?)

Different levels of AI adoption yield varying types of value.

Trying to apply the same KPIs to all of them creates confusion.

Let’s break it down.

Level 1 – Basic Productivity

This is the “ChatGPT on the side” phase. You’re not automating work; you’re speeding up thinking.

What to measure:

- How much faster tasks are completed

- Reduction in back-and-forth communication

- Time saved on summaries and drafting

- Internal clarity and consistency

Simple but useful. Think of it as warming up the engine.

Level 2 – Structured Assistance

Now the team starts using templates, guided prompts, and standardized RA structures.

What to measure:

- Time to first draft of labeling, GSPR, and risk files

- Drop in rework or formatting corrections

- Consistency between team members

- Percentage of documents that start from an AI-supported structure

This is the first “real” performance level. You start seeing repeatable results.

Level 3 – Integrated Workflow Automation

Here, AI steps into the daily workflow.

Research, comparisons, checklists: the groundwork starts to get automated.

What to measure:

- Time to assemble a complete submission package

- Time spent researching each market

- Number of markets handled per quarter

- Percentage of tasks handled by AI agents rather than humans

- Internal vs external (consultant) hours

This is the level where things really start to accelerate.

Level 4 – Full Enablement

The AI doesn’t just help; it participates. It drafts large parts of the dossier and predicts issues.

What to measure:

- End-to-end submission cycle times

- Document completeness on first internal review

- Authority questions predicted vs actual

- Consultant hours avoided (this one is always fun to track)

- Markets handled per team member

Now your small team starts performing like a whole department.

Level 5 – RA Digital Twin

This is the “autonomous RA intern that never sleeps” level.

Most of the groundwork is automated.

What to measure:

- Percentage of documents auto-generated

- Speed of change-impact assessments

- Requirements updated automatically vs manually

- Time to “submission-ready” after receiving inputs

- Submission accuracy (first-pass completeness)

This level changes not just performance but the whole budget structure.
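Whatever level you’re at, most of these KPIs come down to two simple calculations: a change against a pre-adoption baseline, and a share of a total. Here is a minimal Python sketch, assuming you log each KPI once before adoption and once after in the same unit. The `Metric` class, the `share` helper, and all the numbers are hypothetical and for illustration only, not real results.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    """One KPI logged before and after AI adoption (hypothetical structure)."""
    name: str
    baseline: float  # value before adoption, e.g. hours per task
    current: float   # value after adoption, in the same unit

    def percent_change(self) -> float:
        """Relative change vs baseline in percent; negative means the value went down."""
        if self.baseline == 0:
            raise ValueError(f"{self.name}: baseline must be non-zero")
        return (self.current - self.baseline) / self.baseline * 100.0


def share(part: int, total: int) -> float:
    """Percentage of items in a category, e.g. documents started from an AI-supported structure."""
    if total == 0:
        return 0.0  # nothing tracked yet; report 0% rather than dividing by zero
    return part / total * 100.0


if __name__ == "__main__":
    # Illustrative numbers only.
    metrics = [
        Metric("Hours to first labeling draft", baseline=10.0, current=6.0),
        Metric("Rework rounds per document", baseline=4.0, current=3.0),
    ]
    for m in metrics:
        print(f"{m.name}: {m.percent_change():+.1f}%")
    print(f"Docs from AI-supported structure: {share(18, 24):.1f}%")
```

One caveat on the sign convention: for time and rework KPIs a negative change is the win, while for KPIs like markets handled per team member you want a positive one. So label each metric’s “good” direction before you put the numbers on a dashboard.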

2. How does a small RA team know which AI level fits them?

I’ve seen teams jump too high too fast, and others stay too low because they don’t yet trust the technology.

The decision is actually simpler than it looks.

Focus on three things:

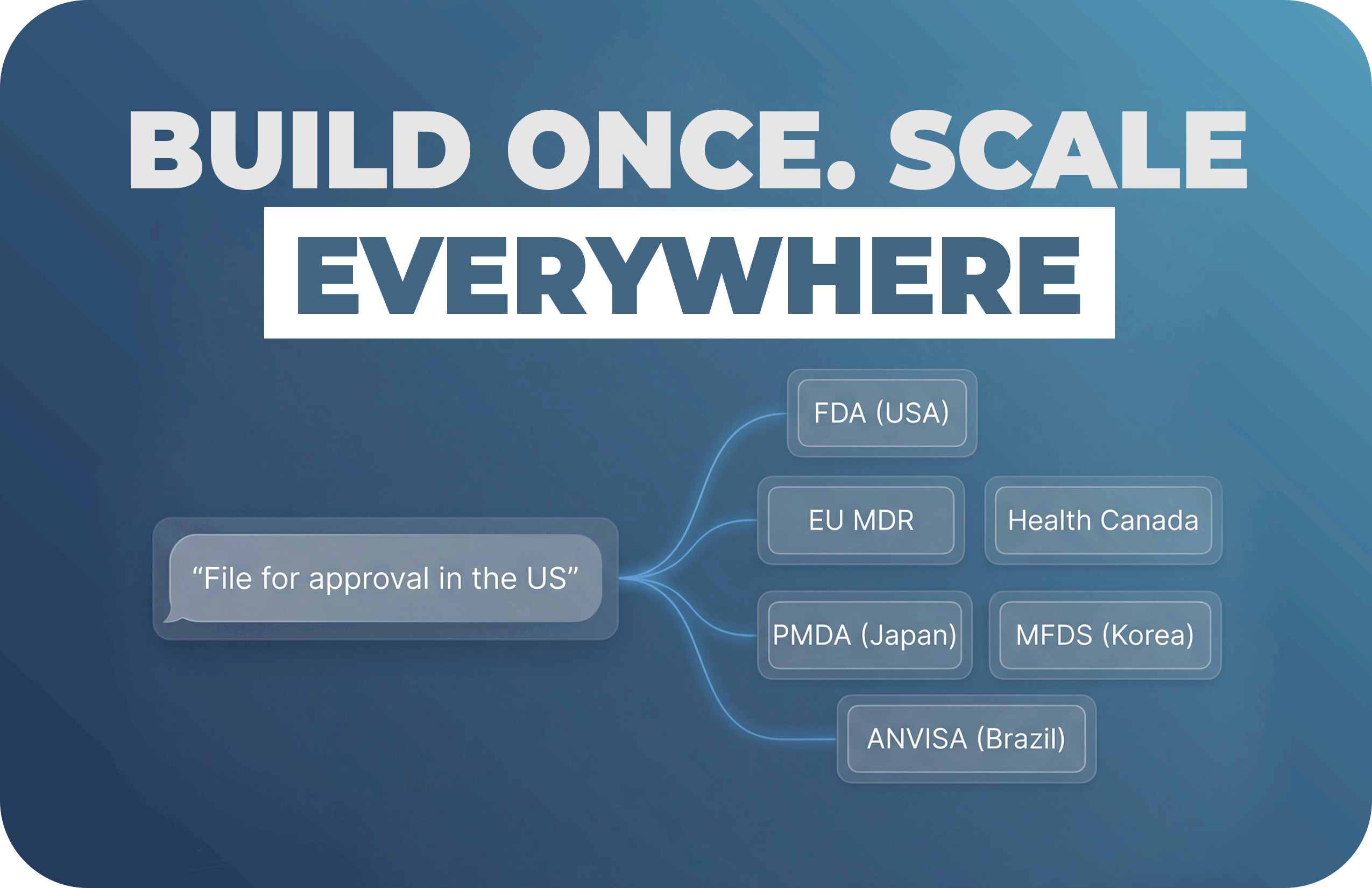

Your 2026 workload

Expansion plans should drive the AI level, not the other way around.

- If you’re entering 3–5 markets, Level 2–3 is plenty

- If you’re entering 6–10 markets, Level 4 is your sweet spot

- If you’re entering more than 10 markets, you’ll need Level 5 to stay sane

AI must match the reality of your workload.

How organized your internal data is

If your documents, product information, and templates are clean and consistent, you can quickly advance to Level 3–4.

If not?

Start with Level 2 and clean up your foundation first.

AI can scale a mess, and you do not want that.

Your resources and risk tolerance

Let’s be honest: every team has different constraints.

- If your team is overwhelmed → Level 3

- If timelines keep slipping → Level 4

- If you want predictable, scalable submissions → Level 5

- If you’re experimenting → Level 1–2

The right level is the one that yields meaningful progress without overstressing the team.

3. Common challenges when moving between AI levels, and how to handle them

Moving to Level 2:

Problem: No templates, no standardization, and everyone writes in their own style.

Effect: AI simply amplifies the inconsistency.

Fix: Get your templates straight. Even a light cleanup helps massively.

Moving to Level 3:

Problem: People lack trust in AI-generated research and comparisons.

Fix: Start with AI as the assistant.

Let humans review everything at first.

Trust builds naturally once the team sees consistent accuracy.

Moving to Level 4:

Problem: Product data, labeling data, and risk data are scattered across 20 places.

Fix: Create one “source of truth.”

This is the backbone for every automated submission.

Moving to Level 5:

Problem: Fear.

People worry that AI replaces judgment.

Fix: Position AI as the automation layer: the “hands,” not the “brain.”

All the real decisions stay with the team.

This shift is empowering when done right.

You may be interested in our next companion post: How to Build a 12-Month AI Roadmap for a 3-Person RA Team.